Deploying machine learning models on the edge isn’t just a cool idea anymore; it’s a must for low-latency, private, and offline-friendly applications. But here’s the catch: edge environments are messy. Devices differ in hardware, operating systems, compute power, and even the kind of neural accelerators they have.

That’s why choosing the right deployment platform is crucial. In this guide, I’ll walk you through the top edge ML deployment platforms I’ve personally worked with or evaluated: what they’re best for, their pros and cons, and practical tips to make them work efficiently in production.

Let’s start with why edge deployment matters before jumping into the best tools available.

Why Deploy ML on Edge Devices?

Edge deployment means running your ML model directly on the device, whether it’s a smartphone, Raspberry Pi, Jetson Nano, or even a microcontroller.

Here’s why it’s becoming so important:

- Lower Latency: No cloud round-trips. Predictions happen locally, often in milliseconds.

- Better Privacy: Sensitive data never leaves the device, which is ideal for healthcare, IoT, and personal assistants.

- Offline Functionality: Apps work even without network connectivity.

- Reduced Cloud Costs: Less dependency on expensive cloud inference.

- Energy and Bandwidth Efficiency: Especially useful in large-scale IoT networks or remote areas.

Now, while that all sounds great, edge devices bring strict constraints: limited compute, smaller memory, tight power budgets, and different hardware accelerators. That’s why the right deployment framework can make or break your success.

How I Evaluate Edge ML Platforms

Before we go through the list, here’s my personal checklist for evaluating edge ML deployment platforms:

- Hardware Support: Does it work on CPU, GPU, NPU, or microcontrollers?

- Model Compatibility: Can it handle TensorFlow, PyTorch, or ONNX models easily?

- Performance: Are latency and throughput good enough for real-time inference?

- Memory & Size: How small can the runtime and the model get, and how much accuracy does shrinking the model cost?

- Optimization Features: Quantization, pruning, and operator fusion.

- Ease of Deployment: Conversion tools, documentation, and build integration.

- Community & Ecosystem: Is there active support and real-world examples?
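If you want to turn this checklist into something comparable across candidates, one option is a simple weighted score. The sketch below is only an illustration: the weights, the 0 to 5 rating scale, and the example ratings are placeholders I made up, not measurements of any real platform.

```python
from typing import Dict

# Hypothetical weights for the seven checklist criteria (they sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "hardware_support": 0.20,
    "model_compatibility": 0.15,
    "performance": 0.20,
    "memory_size": 0.15,
    "optimization": 0.10,
    "ease_of_deployment": 0.10,
    "community": 0.10,
}


def weighted_score(ratings: Dict[str, float],
                   weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine 0-5 ratings into one score; a missing criterion raises KeyError."""
    return sum(weights[name] * ratings[name] for name in weights)


# Placeholder ratings for an imaginary runtime, not data about a real tool.
example_ratings = {name: 4.0 for name in WEIGHTS}
example_ratings["memory_size"] = 2.0
print(round(weighted_score(example_ratings), 2))
```

Adjust the weights to your project. A battery-powered sensor would probably weight memory and size far more heavily than community support.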

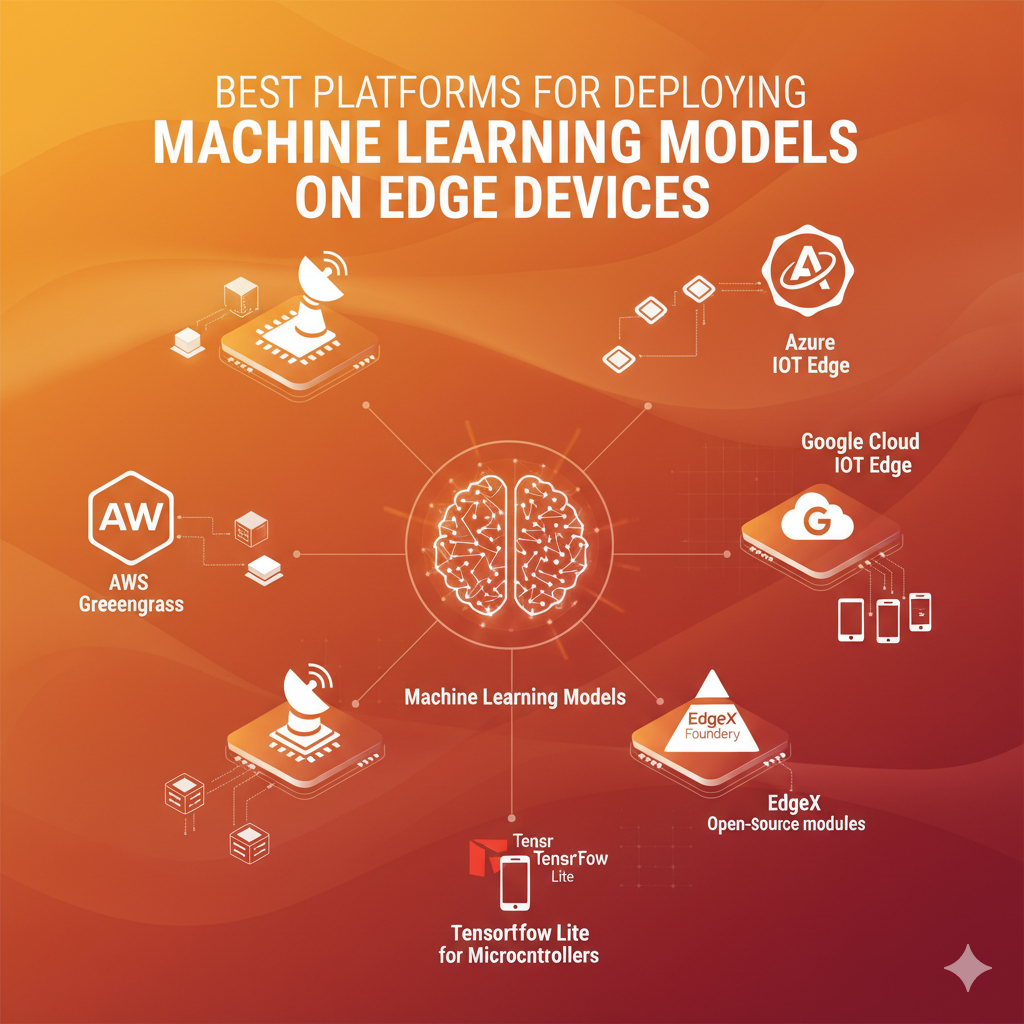

With that in mind, here are the best platforms for deploying ML models on edge devices in 2025.

1. TensorFlow Lite (LiteRT) — Best All-Rounder for Mobile and Embedded

Best For: Android/iOS, Raspberry Pi, ARM boards, and embedded Linux systems.

TensorFlow Lite (recently rebranded to LiteRT) is the go-to choice for deploying TensorFlow and Keras models on edge. It’s lightweight, reliable, and comes with excellent quantization tools to reduce model size and latency.

Why It’s Great

- Optimized for on-device performance.

- Works well with GPU, NNAPI, and Edge TPU delegates.

- Quantization-aware training for smaller, faster models.

- Has TensorFlow Lite for Microcontrollers for ultra-small devices.

Pros

- Mature ecosystem and community support.

- Minimal conversion friction if your model is already in TensorFlow.

- Built-in hardware acceleration options.

Cons

- Conversion from PyTorch or other frameworks adds extra steps.

- Limited support for very complex operators.

Use Cases

- On-device image classification.

- Pose estimation in fitness apps.

- Keyword spotting on IoT or microcontrollers.
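To make the conversion step concrete, here is a minimal sketch of converting a TensorFlow SavedModel with post-training dynamic-range quantization (note that this is not quantization-aware training, which happens during training). The function name and file paths are placeholders of mine. TensorFlow is imported inside the function so the snippet loads even where TensorFlow is not installed.

```python
from pathlib import Path


def convert_saved_model(saved_model_dir: str, out_path: str,
                        quantize: bool = True) -> int:
    """Convert a SavedModel to a .tflite file and return the bytes written."""
    import tensorflow as tf  # lazy import: only needed when converting

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    if quantize:
        # With no representative dataset supplied, the default optimization
        # applies dynamic-range quantization to the weights.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    tflite_bytes = converter.convert()
    return Path(out_path).write_bytes(tflite_bytes)
```

A call such as `convert_saved_model("export/my_model", "my_model.tflite")` (both paths hypothetical) writes the converted file. Comparing the result with `quantize=False` is a quick way to see how much size you save.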

2. PyTorch Edge (ExecuTorch) — Best for PyTorch Workflows

Best For: Mobile and embedded apps built from PyTorch-trained models.

If your research and training pipeline is already in PyTorch, you’ll love PyTorch Edge (ExecuTorch). It’s the successor to the older TorchScript-based PyTorch Mobile flow, optimized for lightweight deployment and mobile integration.

Why It’s Great

- Keeps the entire workflow in the PyTorch ecosystem.

- Good performance with quantized and exported models.

- Integrates easily with Android and iOS build systems.

Pros

- Minimal code changes from training to deployment.

- Supports dynamic models.

- Constantly improving with each PyTorch release.

Cons

- Slightly heavier runtime than TensorFlow Lite.

- Still catching up in embedded support.

Use Cases

- Mobile NLP apps.

- Object detection using PyTorch models.

- On-device personalization models.

3. ONNX Runtime (Mobile & Edge) — Best for Portability

Best For: Teams working across multiple frameworks or devices.

ONNX Runtime is Microsoft’s cross-platform runtime that supports models exported from TensorFlow, PyTorch, Scikit-learn, and others. Its mobile and edge build options make it ideal when you want portability without being tied to a specific framework.

Why It’s Great

- Framework-agnostic: one format for all models.

- Supports multiple hardware accelerators (CPU, GPU, NNAPI, CoreML).

- Excellent documentation and integration with mobile toolchains.

Pros

- Extremely flexible and lightweight.

- Optimized for both Android and iOS.

- Broad community support.

Cons

- Conversion to ONNX may fail for custom operations.

- Requires careful provider setup for best performance.

Use Cases

- Unified SDKs across mobile and desktop.

- AI in cross-platform IoT devices.

- Edge analytics gateways.
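The "careful provider setup" point is easier to see in code. This sketch picks execution providers in a preferred order and falls back to the CPU. The provider strings are ONNX Runtime's own identifiers, but the preference order and the helper names are my assumptions, so tune them for your devices.

```python
from typing import Iterable, List, Sequence

# Assumed preference order for illustration: accelerators first, CPU last.
PREFERRED_PROVIDERS: Sequence[str] = (
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "CoreMLExecutionProvider",
    "NnapiExecutionProvider",
    "CPUExecutionProvider",
)


def pick_providers(available: Iterable[str],
                   preferred: Sequence[str] = PREFERRED_PROVIDERS) -> List[str]:
    """Keep preferred providers that this build offers, in priority order."""
    available_set = set(available)
    chosen = [name for name in preferred if name in available_set]
    return chosen or ["CPUExecutionProvider"]


def load_session(model_path: str):
    """Create an inference session using the best providers available."""
    import onnxruntime as ort  # lazy import: only needed on the device

    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

ONNX Runtime tries the providers in list order, so operators an accelerator cannot handle can still run on the CPU provider at the end of the list.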

4. Intel OpenVINO — Best for Intel Hardware and Vision Workloads

Best For: Edge gateways, PCs, and devices running Intel CPUs or GPUs.

If your edge hardware uses Intel chips, OpenVINO is hands down the best optimization toolkit available. It’s built to maximize inference speed using Intel’s CPUs, integrated GPUs, and VPUs like Movidius.

Why It’s Great

- Exceptional performance for computer vision models.

- Provides a model optimizer that shrinks models intelligently.

- Supports deployment across Intel CPUs, iGPUs, and AI accelerators.

Pros

- Robust tooling and performance profiling.

- Free and production-ready.

- Works seamlessly with popular frameworks (TF, PyTorch, ONNX).

Cons

- Mostly limited to Intel ecosystem.

- Slight learning curve for setup and optimization.

Use Cases

- Smart CCTV and video analytics.

- Industrial automation.

- Real-time object detection on Intel-based edge devices.

5. NVIDIA TensorRT and Jetson — Best for GPU-Accelerated Edge

Best For: AI robotics, autonomous systems, and heavy vision inference.

When speed and throughput matter, TensorRT and the Jetson platform are unbeatable. TensorRT optimizes deep learning models for NVIDIA GPUs, delivering lightning-fast inference with FP16 and INT8 precision.

Why It’s Great

- High performance and throughput.

- Works perfectly with the Jetson ecosystem (Nano, Xavier, Orin).

- TensorRT-LLM for large model inference on GPUs.

Pros

- Excellent optimization for GPU workloads.

- Mature tools and profiling capabilities.

- Ideal for high-end edge AI.

Cons

- More expensive hardware.

- Higher power consumption compared to CPU-based devices.

Use Cases

- Robotics and autonomous drones.

- Multi-camera edge analytics.

- Edge AI servers with GPUs.
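To get a feel for what FP16 and INT8 buy you, the sketch below estimates weight storage at each precision and shows the rounding FP16 introduces. It uses only the Python standard library and does not call TensorRT, which additionally fuses layers and calibrates INT8 ranges. The parameter count is a made-up example.

```python
import struct

BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_footprint_mb(num_params: int, precision: str) -> float:
    """Approximate weight storage in megabytes (1 MB = 1e6 bytes)."""
    return num_params * BYTES_PER_VALUE[precision] / 1e6


def round_to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


params = 25_000_000  # hypothetical model size
for precision in ("fp32", "fp16", "int8"):
    print(precision, weight_footprint_mb(params, precision), "MB")
print("0.1 stored as FP16:", round_to_fp16(0.1))
```

Halving or quartering the bytes per weight also cuts memory bandwidth, which is often the real bottleneck on edge GPUs.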

6. Apple Core ML — Best for iOS and Apple Devices

Best For: On-device AI experiences on iPhone, iPad, and macOS.

Core ML is Apple’s native machine learning framework optimized for the Apple Neural Engine (ANE). It’s the best way to run models on iPhones efficiently.

Why It’s Great

- Seamless integration with Xcode and Swift.

- Optimized for Apple’s chips and Neural Engine.

- Privacy-friendly, since models run locally.

Pros

- Exceptional performance on Apple hardware.

- Easy conversion from PyTorch or TensorFlow using Core ML Tools.

- Supports on-device personalization.

Cons

- Only works within Apple’s ecosystem.

- Requires conversion to .mlmodel format.

Use Cases

- Face detection, text prediction, and AR apps.

- Personalized recommendations in iOS apps.

- Offline inference on macOS applications.

7. Edge Impulse and TinyML Toolchains — Best for Microcontrollers

Best For: Ultra-low-power IoT and sensor devices.

Edge Impulse is a complete end-to-end platform for building ML models on microcontrollers and constrained devices. It simplifies everything from data collection to model deployment.

Why It’s Great

- Handles TinyML workflow from data to deployment.

- Compatible with many MCUs and SDKs.

- Built-in tools for signal processing and quantization.

Pros

- Extremely lightweight.

- Great for hobbyists, startups, and industrial IoT.

- Works even on 32 KB RAM devices.

Cons

- Limited to small models and basic tasks.

- Not suitable for heavy computer vision workloads.

Use Cases

- Motion and vibration anomaly detection.

- Wake word detection.

- Predictive maintenance sensors.
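Before committing to a microcontroller, I find it useful to estimate whether the model's activations fit in RAM at all. The sketch below is a rough back-of-the-envelope check for a purely sequential int8 model. The 4 KB overhead and the layer sizes are assumptions for illustration, and it is not part of any Edge Impulse API.

```python
from typing import List, Tuple


def fits_in_ram(activation_sizes: List[int], ram_bytes: int,
                overhead_bytes: int = 4096) -> Tuple[bool, int]:
    """Estimate peak RAM for a sequential int8 model.

    activation_sizes[i] is the byte size of the tensor between layers
    (index 0 is the model input; at least two entries are required).
    While a layer runs, its input and output buffers must both be
    resident, so the peak is the largest adjacent pair.
    """
    peak = max(a + b for a, b in zip(activation_sizes, activation_sizes[1:]))
    needed = peak + overhead_bytes
    return needed <= ram_bytes, needed


# Hypothetical keyword-spotting model: tensor sizes in bytes.
sizes = [490, 8000, 8000, 64, 12]
print(fits_in_ram(sizes, ram_bytes=32 * 1024))
```

Weights usually live in flash rather than RAM on these devices, so this estimate covers activations only. Real toolchains report exact numbers, so treat this as a first filter.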

8. Vendor SDKs — When You Need Hardware-Specific Optimization

In some cases, using vendor-provided SDKs gives you the best hardware utilization:

- Qualcomm AI SDK (SNPE) — optimized for Snapdragon DSP/NPU.

- Arm NN & Compute Library — best for ARM-based processors.

- Google Coral Edge TPU Runtime — perfect for USB/PCIe edge accelerators.

These SDKs are highly efficient but lock you into specific hardware ecosystems.

Practical Edge Deployment Workflow I Follow

Here’s the step-by-step approach I personally use for deploying ML models on edge devices:

- Pick Your Target Hardware Early: Benchmark early; edge performance varies drastically.

- Train in Your Preferred Framework: PyTorch or TensorFlow, whichever suits your workflow.

- Optimize the Model: Use quantization or pruning to reduce model size and inference time.

- Convert for Deployment: Convert to TFLite, ExecuTorch, ONNX, or OpenVINO formats.

- Profile on Device: Test latency, power consumption, and memory usage directly on the hardware.

- Iterate and Re-optimize: Adjust architecture or runtime if constraints aren’t met.

- Deploy and Monitor: Package with runtime dependencies and set up OTA model updates.
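For the profiling step, a small timing harness goes a long way. This is a minimal sketch using only the standard library. `run_once` stands in for whatever call invokes your runtime, and the warmup count, iteration count, and power figure are assumptions you should tune or measure yourself.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(run_once: Callable[[], object], warmup: int = 10,
              iterations: int = 100) -> Dict[str, float]:
    """Time run_once() and report latency statistics in milliseconds."""
    for _ in range(warmup):  # let caches and clock governors settle
        run_once()
    samples = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = min(len(samples) - 1, int(0.95 * len(samples)))
    return {
        "mean_ms": statistics.fmean(samples),
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
    }


def energy_per_inference_mj(avg_power_watts: float, latency_ms: float) -> float:
    """Energy in millijoules: watts multiplied by milliseconds."""
    return avg_power_watts * latency_ms


# Stand-in workload; replace the lambda with a call into your runtime.
stats = benchmark(lambda: sum(i * i for i in range(10_000)))
print(stats)
print(energy_per_inference_mj(avg_power_watts=2.5, latency_ms=stats["p50_ms"]), "mJ")
```

I report p95 alongside the median because tail latency is usually what breaks a real-time budget. The power value has to come from an external meter or an on-board sensor, since Python cannot measure it for you.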

Tips I’ve Learned from Real Projects

- Always test on real hardware: Emulator benchmarks are misleading.

- Quantization trade-offs: INT8 quantization can cause slight accuracy drops — test it.

- Avoid unsupported operators: Simplify your architecture for smoother conversions.

- Use hardware delegates: NNAPI, Core ML, or TensorRT make a huge difference.

- Measure power, not just speed: Edge means battery; efficiency matters more than raw FPS.
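To see where the INT8 accuracy drop comes from, it helps to quantize a few numbers by hand. The sketch below implements plain per-tensor affine quantization in pure Python. It is a simplified illustration with made-up weights, not the exact scheme any particular runtime uses.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine quantization to int8; returns (quantized, scale, zero_point)."""
    # The representable range must include 0 so that 0.0 maps exactly.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all values are 0
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


weights = [-1.0, -0.25, 0.0, 0.4, 1.0]  # made-up values for illustration
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("codes:", q)
print("scale:", scale, "zero point:", zp, "max error:", max_error)
```

Each weight lands on the nearest of 256 levels, so the per-weight error is on the order of half the scale. A single outlier weight stretches the range and coarsens every other value, which is one reason some models lose more accuracy than others.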

How to Choose the Right Platform (Quick Decision Guide)

| Use Case | Best Platform |
|---|---|
| Android/iOS apps | TensorFlow Lite / Core ML |
| Cross-framework portability | ONNX Runtime |
| PyTorch-based apps | PyTorch Edge (ExecuTorch) |
| Intel-based edge PCs | OpenVINO |
| GPU-powered edge AI | NVIDIA TensorRT / Jetson |
| Tiny IoT or MCU devices | TensorFlow Lite Micro / Edge Impulse |

Wrapping Up

Edge AI is the future, and it’s moving fast. The days of sending every inference to the cloud are over. Whether it’s detecting objects in real time, processing audio locally, or running smart sensors in factories, deploying ML models on the edge unlocks speed, privacy, and scalability.

But don’t just pick a tool because it’s popular; choose based on your model, your hardware, and your deployment goals.

If I had to summarize it:

- TensorFlow Lite (LiteRT) for general edge deployment.

- PyTorch Edge (ExecuTorch) for PyTorch-native projects.

- ONNX Runtime for maximum flexibility.

- OpenVINO and TensorRT for performance-critical use cases.

- Edge Impulse for embedded and sensor-based ML.

The edge ML space is maturing fast, and these platforms are making it practical to run AI anywhere.